Mastering Apache Airflow: Orchestrating Scalable and Efficient Data Workflows

A Somewhat Comprehensive Guide to Task Management, Operators, and Advanced Features in Airflow

Introduction

Apache Airflow and I go way back. We've spent long nights together—me writing DAGs, it quietly scheduling things behind the scenes, both of us failing jobs and pretending nothing happened. Over the years, I've used Airflow quite often: it became like almost muscle memory, for some specifc works.

Things like writing a task, setting some dependencies, slapping a retry policy on it—and boom: pipelines magically happen. Autopilot mode engaged.

But recently, I had a moment of pause (a failed DAG run at 2AM tends to do that). I realized I’d been coasting. I knew how to use Airflow, but I couldn’t clearly explain why it works the way it does—or how it actually holds together under the hood. It was time to revisit the tool that runs nearly half of modern data engineering and understand it not just as a user, but as a curious engineer.

So, this isn’t a “how to write your first DAG” tutorial (we’re past that point—plus, the official docs have you covered). This is more like a guided backstage tour of Airflow—its internals, architecture, and some powerful features you might not be using enough.

Bring your own DAGs—we’re diving into the system that orchestrates orchestration.

The Real Beginning

Apache Airflow is an open-source workflow orchestration platform that helps engineers programmatically author, schedule, and monitor data pipelines. It was born at Airbnb in October 2014, at a time when the company’s data workflows were becoming too chaotic for spreadsheets and cron jobs to handle.

Maxime Beauchemin—who also created Superset—led the charge. He designed Airflow around three core principles: flexibility, observability, and a deep commitment to code-first workflows.

From the start, Airflow adopted a "configuration as code" philosophy. But instead of leaning on verbose YAML files, obscure XML trees, or some custom DSL from the seventh circle of parser hell, it embraced Python—plain and powerful. That decision unlocked a new level of extensibility: users could import libraries, write conditional logic, define dynamic DAGs, and treat pipelines like any other Python artifact—testable, composable, and version-controlled.

Airbnb open-sourced Airflow not long after its internal debut. It entered the Apache Incubator in 2016 and graduated as a top-level Apache project by 2019. Since then, it’s become the orchestrator of choice for thousands of data teams around the world.

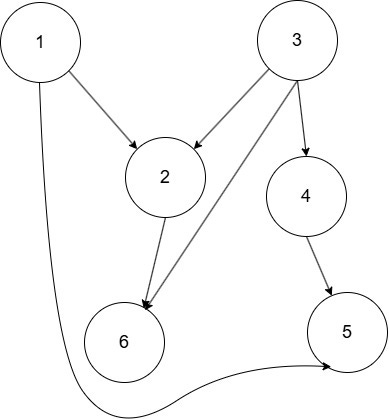

At the heart of Airflow is the DAG—a Directed Acyclic Graph. Think of it as a roadmap of your workflow: each node is a task (say, fetching data or running a SQL query), and each edge represents a dependency (like "only run this after that finishes"). But these DAGs aren't just visual—they're actual Python objects, letting you build, inspect, and test your pipelines with full programmatic control.

Under the hood, Airflow’s pluggable architecture makes it extremely modular. You’ve got operators that define what tasks do, sensors that wait for conditions, hooks that manage connections, and executors that run your tasks across everything from local threads to Kubernetes pods. Whether you’re firing off a Spark job, syncing with an API, or executing a dbt model, Airflow likely has an operator—or a provider package—for it.

While the core platform can be self-hosted, the ecosystem now includes fully managed solutions like Amazon MWAA, Google Cloud Composer, and Astronomer, making it easier to scale workflows without worrying about infrastructure.

Airflow gives you power, visibility, and control over your data workflows—without forcing you into rigid abstractions or proprietary pipelines. It's not without its quirks (we’ll get to those later), but it remains one of the most battle-tested, production-grade tools in the modern data stack.

Why Airflow?

Orchestrating an end-to-end data pipeline is harder than it sounds.

You’re not just writing scripts—you’re managing schedules, dependencies, retries, failures, alerts, and historical tracking. It’s easy for a simple job to grow into a fragile Rube Goldberg machine if you're not careful.

When should we trigger the extraction from a third-party API?

How do we sequence that with downstream transformations?

What happens if the job fails halfway? Can we retry? Can we backfill?

Airflow solves these questions by turning workflows into code. You define your DAGs in Python, annotate them with scheduling metadata, and let Airflow take care of execution, retries, state tracking, and observability. The platform handles everything from triggering jobs at specific intervals to visualizing your workflow status in a clean, real-time web UI.

In the next sections, we’ll break down how Airflow actually works—its architecture, core components, and some of the best patterns for building robust pipelines.

🧭 DAGs and Control Flow

At the foundation of every Apache Airflow workflow is the DAG—a Directed Acyclic Graph. It defines the control flow: what tasks need to run, in what order, and under what conditions. Unlike many workflow systems that rely on static configurations or visual tools, Airflow DAGs are written in Python, giving you the full expressive power of a programming language to define dynamic, modular, and scalable pipelines.

Each DAG is composed of:

Tasks – individual units of work (e.g., calling an API, loading a dataset, transforming data).

Dependencies – the relationships between tasks that define execution order.

Types of Control Flow

1. Linear Execution

Tasks can be chained in a strict sequence. For example, task A runs first, followed by B, then C.

2. Parallel Execution

A single task can fan out into multiple downstream tasks. This enables tasks B and C to run simultaneously after A completes, improving throughput for independent steps.

3. Conditional Branching

Using conditional logic, a task can direct the workflow down different paths at runtime. This is typically done with branching operators that evaluate conditions and determine which downstream task(s) to execute.

4. Dynamic Task Generation

Airflow supports generating tasks dynamically during DAG execution. For example, you might loop through a list of files or dates and spin up a task for each one. This is especially useful when the number of tasks isn't known at DAG definition time.

The “Acyclic” Rule

Airflow enforces a key property of DAGs: they must be acyclic. In other words, there can be no circular dependencies. This ensures that execution flow is clear, deterministic, and free from infinite loops or ambiguous scheduling paths.

The Power of Python

Since DAGs are written in Python, you’re not confined to a rigid template or static structure. You can:

Generate tasks dynamically using functions, loops, or configurations

Create reusable workflow patterns and templates

Integrate business logic directly into your scheduling layer

Maintain workflows in version control alongside application code

This design—treating orchestration as code—makes Airflow uniquely flexible for modern data engineering workflows.

💡Tasks and Operators in Apache Airflow

In Apache Airflow, tasks and operators are crucial concepts that form the building blocks of workflows previously defined by Directed Acyclic Graphs (DAGs). While the terms "task" and "operator" are sometimes used interchangeably, they represent distinct components in the Airflow framework.

Operators: The Templates for Tasks

An Operator in Airflow is essentially a reusable template for a task. Operators are pre-defined classes that represent a unit of work in a DAG. You can think of an operator as a blueprint that encapsulates the logic needed to perform a specific action, such as running a Bash command, executing Python code, or sending an email.

Airflow provides a vast set of core operators, with more available through community provider packages. Some of the most commonly used core operators include:

BashOperator: Executes a bash command.

PythonOperator: Calls an arbitrary Python function.

EmailOperator: Sends an email.

An operator is instantiated and then used to create a task within a DAG. For example, here's how to use the HttpOperator to make a request to a given endpoint:

from airflow.providers.http.operators.http import HttpOperator

with DAG("my_dag", start_date=pendulum.now()) as dag:

ping = HttpOperator(

task_id="ping_task",

endpoint="http://example.com/update/",

)

The operator takes care of the logic for making the HTTP request. You just need to provide the necessary arguments like the endpoint URL.

Tasks: Units of Execution

A Task represents a single unit of execution within a DAG. It is an instance of an operator, meaning each task is defined using an operator and its associated parameters. When you instantiate an operator, you're creating a task that can be added to your DAG.

from airflow.operators.python import PythonOperator

def process_data():

return "Processed data!"

with DAG("my_dag", start_date=pendulum.now()) as dag:

task = PythonOperator(

task_id="process_task",

python_callable=process_data,

)In this case, the PythonOperator is used to create a task that calls the process_data function. The task is then executed according to the DAG's schedule and dependencies.

The Relationship Between Tasks and Operators

Operators are templates for tasks. They contain predefined logic for executing specific actions.

Tasks are instances of operators. They are the actual units of work within a DAG that execute the operator’s logic.

For example, if you use the BashOperator, you can create multiple tasks, each executing a different bash command. The operator itself is reusable, but each task can be configured with its own parameters.

task1 = BashOperator(

task_id="bash_task_1",

bash_command="echo 'Task 1 executed'",

dag=dag,

)

task2 = BashOperator(

task_id="bash_task_2",

bash_command="echo 'Task 2 executed'",

dag=dag,

)

task1 >> task2 # task1 runs before task2The @task Decorator

Airflow 2.0 introduced the @task decorator, which allows you to define Python functions as tasks in a more readable and Pythonic way. It simplifies the process of turning Python code into a task without needing to explicitly use the PythonOperator.

from airflow.decorators import task

@task

def my_python_task():

return "Task completed!"

with DAG("my_dag", start_date=pendulum.now()) as dag:

my_task = my_python_task()The @task decorator automatically handles the conversion of the function into a task, and you don’t need to manually define an operator. It's particularly useful for simpler tasks that don’t require Jinja templating or other advanced configurations.

Templating with Jinja

Airflow allows the use of Jinja templating to dynamically generate content for tasks. This is particularly useful when you need to pass dynamic parameters to a task at runtime.

For instance, you can use Jinja templates in operator parameters to render values dynamically:

from airflow.operators.bash import BashOperator

with DAG("my_dag", start_date=pendulum.now()) as dag:

bash_task = BashOperator(

task_id="templated_bash_task",

bash_command="echo 'The data interval start is {{ ds }}'",

)In this example, the {{ ds }} template variable will be replaced with the start date of the DAG's schedule, allowing for dynamic customization of the task's parameters based on runtime values.

Handling Complex Template Logic

While Jinja is the primary tool for dynamic template rendering in Airflow, there are situations where using a callable for more complex logic is more suitable. If you need to generate a value dynamically based on more complex processing, you can define a function and use it with the operator.

def build_complex_command(context, jinja_env):

return "echo 'Running complex logic!'"

t = BashOperator(

task_id="complex_task",

bash_command=build_complex_command,

dag=dag,

)In this case, the callable function build_complex_command receives the execution context and Jinja environment as arguments and returns the command to execute.

Reserved Params in Airflow

In Airflow 2.2.0 and later, the params keyword is reserved for DAG serialization and should not be used directly in third-party operators to avoid conflicts during DAG serialization. If you encounter errors related to this, it's advised to rename any custom parameters that might conflict.

Essentially, Operators provide reusable templates for tasks, while tasks are the concrete instances that perform the work. With features like Jinja templating and the @task decorator, you can further customize your workflows to suit your needs, leveraging dynamic behavior and Pythonic simplicity.

TaskFlow in Apache Airflow 🌊

Introduced in Airflow 2.0, the TaskFlow API simplifies DAG authoring by using Python functions as tasks, removing the need for extra boilerplate code. With TaskFlow, you can define clean, easy-to-read DAGs using Python's @task decorator, and Airflow automatically handles passing inputs and outputs between tasks using XComs.

Key Features of TaskFlow

Automatic XCom Management : TaskFlow automatically handles the transfer of data between tasks via XComs. When you call a function decorated with

@task, the return value becomes an XComArg, which you can use as inputs for downstream tasks. This eliminates the need to manually manage XComs and simplifies task dependencies.Example:

from airflow.decorators import task

from airflow.operators.email import EmailOperator

@task

def get_ip():

return my_ip_service.get_main_ip()

@task(multiple_outputs=True)

def compose_email(external_ip):

return {

'subject': f'Server connected from {external_ip}',

'body': f'Your server executing Airflow is connected from the external IP {external_ip}'

}

email_info = compose_email(get_ip())

EmailOperator(

task_id='send_email_notification',

to='example@example.com',

subject=email_info['subject'],

html_content=email_info['body']

)In this (a bit long) example:

get_ip()returns an IP address, which is automatically passed as an argument tocompose_email().compose_email()generates the email content, and theEmailOperatoruses this information to send the email.

TaskFlow automatically infers the dependencies between tasks: compose_email is downstream of get_ip(), and send_email_notification is downstream of compose_email.

Simplified Code with @task 🎯: The

@taskdecorator simplifies task creation. Instead of manually usingPythonOperator, you can directly define Python functions as tasks. You can even use plain values or variables as inputs to these functions.

@task

def hello_name(name: str):

print(f'Hello {name}!')

hello_name('Airflow users')

Access to Airflow Context Variables : You can access Airflow's context variables (such as

task_instance,dag_run, etc.) within your task functions. These context variables provide useful metadata about the task's execution state.

from airflow.models.taskinstance import TaskInstance

from airflow.models.dagrun import DagRun

@task

def print_ti_info(task_instance: TaskInstance | None = None, dag_run: DagRun | None = None):

print(f"Run ID: {task_instance.run_id}") # Run ID: scheduled__2023-08-09T00:00:00+00:00

print(f"Duration: {task_instance.duration}") # Duration: 0.972019

print(f"DAG Run queued at: {dag_run.queued_at}") # 2023-08-10 00:00:01+02:20

Logging in TaskFlow : Logging within tasks is easy: you can import Python’s standard logging library and use it to log messages.

Here’s an example:

import logging

logger = logging.getLogger("airflow.task")

@task

def process_data():

logger.info("Data processing started.")

# Task logic here

logger.info("Data processing completed.")

Passing Arbitrary Objects as Arguments : TaskFlow supports passing custom objects as task arguments. Airflow uses XCom serialization to pass variables between tasks. You can pass objects that are decorated with

@dataclassor@attr.define, or implement custom serialization methods for your classes.Below I provided an example of passing a Dataset:

from airflow import Dataset

from airflow.decorators import dag, task

SRC = Dataset("https://data-source-uri.com")

@dag(schedule="@daily")

def etl():

@task

def retrieve(src: Dataset) -> dict:

# Task logic here

return {}

Additionally, Dataset objects can auto-register as inlets and outlets in the TaskFlow pipeline, making data tracking and management seamless.

Custom Serialization: If you need more control over object serialization, you can implement a custom

serialize()method and adeserialize()static method to manage how custom objects are passed between tasks.Sensors with TaskFlow: Sensors can also be written using TaskFlow, allowing you to wait for certain events (e.g., file availability) before proceeding with the execution of downstream tasks. This approach simplifies sensor management in the DAG.

TaskFlow with Datasets

A great feature introduced in Airflow 2.5.0 is the ability to use Dataset objects in TaskFlow. These are used to track the flow of data through your tasks and provide easy integration with external data sources.

from airflow import Dataset

from airflow.decorators import dag, task

SRC = Dataset("https://data-source-uri.com")

@dag(schedule="@daily")

def etl():

@task

def retrieve(src: Dataset) -> dict:

# Retrieve data from the source

return {}

@task

def process_data(data: dict) -> dict:

# Process the data

return {}

data = retrieve(SRC)

processed_data = process_data(data)

This example demonstrates how Datasets allow for easier management of data through the DAG pipeline.

Sensors in Apache Airflow ⏳

Sensors in Apache Airflow are a special type of operator designed to wait for a specific event to occur before allowing downstream tasks to execute. They are particularly useful when you need to pause the execution of a DAG until a certain condition is met, such as waiting for a file to appear, a time condition, or an external event.

Types of Sensors

Sensors have two main modes of operation, allowing you to manage their resource usage efficiently:

Poke Mode (default) : In this mode, the sensor continuously checks for the condition at regular intervals. The sensor occupies a worker slot for the entire runtime, making it more resource-intensive.

Example: A sensor might check for the presence of a file every second, which would use a worker slot consistently until the file is found.

Reschedule Mode : This mode is more efficient as the sensor only uses a worker slot when it checks the condition. It will sleep for a configured duration between checks, thus freeing up the worker slot in between. This mode is best suited for cases where the sensor checks for an event infrequently, such as once per minute or even longer.

Example: A sensor checking for a file every minute would reschedule itself, releasing the worker slot for most of the time.

Example of a Sensor in Action

Here’s an example of using a FileSensor to wait for a file to appear:

from airflow.providers.filesystem.sensors import FileSensor

t = FileSensor(

task_id="wait_for_file",

filepath="/tmp/my_file.txt", # Path to the file to be monitored

poke_interval=10, # Check every 10 seconds

timeout=600, # Give up after 10 minutes if file is not found

mode="poke", # Use poke mode

dag=dag,

)

Pre-built Sensors in Airflow

Airflow includes many pre-built sensors in its core and provider packages, such as:

FileSensor : Waits for a file to appear in a specified location.

TimeSensor : Waits until a specified time.

ExternalTaskSensor : Waits for a task in another DAG to complete.

HttpSensor : Waits for a specific HTTP endpoint to return a desired response.

Deferrable Sensors

Starting in Airflow 2.0, sensors can also be deferrable, which means they can release the worker slot and defer their execution until the condition is met. This is a highly efficient way of handling sensors, as it minimizes resource usage.

For instance, you might use a DeferrableFileSensor to wait for a file, but instead of holding a worker slot, the sensor will defer and re-schedule the task once the file is found.

Sensors are a critical tool in Apache Airflow when building workflows that depend on external events or conditions. By understanding and configuring their modes (poke vs. reschedule), you can balance efficiency and latency, ensuring your workflows run smoothly and efficiently.

🏁 Conclusion

Apache Airflow has evolved into a robust orchestration platform, offering much more than just task scheduling. By allowing the definition of DAGs as code, it brings flexibility and transparency to data workflows. Through powerful abstractions like Operators, Sensors, and the TaskFlow API, Airflow simplifies complex task dependencies, data transfer (via XComs), and event-driven orchestration. The TaskFlow API, with its seamless handling of Python functions as tasks, reduces boilerplate and improves readability, while features like Sensors and Custom Serialization provide granular control over execution logic and data management.

Airflow’s flexibility in deployment—whether on-premise, in the cloud, or in hybrid environments—coupled with support for modern architectures, makes it an indispensable tool for scaling and managing data pipelines across a variety of domains, including ETL, ML, and event-driven workflows.

Mastering Airflow not only unlocks significant productivity and stability for engineering teams but also empowers them to build highly maintainable, efficient, and scalable workflows. Whether you're orchestrating simple tasks or complex data processing pipelines, Airflow remains a cornerstone of modern data infrastructure, enabling innovation and optimization in the fast-evolving world of data engineering.